Few-shot prompting has emerged as a powerful technique in natural language processing (NLP), especially in the context of large language models like Claude and GPT-4.

In this guide, we’ll cover everything you need to know about few-shot prompting: its purpose, applications, mechanics, and examples to illustrate its versatility. By the end, you’ll understand how few-shot prompting can be applied across a wide range of tasks and how to craft effective prompts for your needs!

What is Few-Shot Prompting?

Few-shot prompting is a technique used in artificial intelligence, particularly with language models, where the model is provided with a small number of examples (or “shots”) of a task before it generates a response. This method helps the model understand the desired output format and context, improving its performance on similar tasks.

It sits between zero-shot learning (where no examples are given) and fully supervised fine-tuning (which requires extensive labeled data).

How Few-Shot Prompting Works

In few-shot prompting, you present the model with a few instances of input-output pairs that illustrate the task at hand. For example, if you want the model to classify the sentiment of movie reviews, you might provide examples like:

- Input: “I loved the movie!”
  Output: Positive

- Input: “The plot was boring.”
  Output: Negative

From these examples, the model infers the pattern and generates similar outputs for new inputs. No model weights are updated: the examples shape only the response to this particular prompt.
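As an illustration, the snippet below assembles the two movie-review examples into a single prompt string, leaving the last `Output:` open for the model to complete. The function name `build_few_shot_prompt` and the third review are hypothetical, and the `Input:`/`Output:` labels simply mirror the format used above; this is a sketch, not a required layout.

```python
def build_few_shot_prompt(examples, query):
    """Format (input, output) pairs as labeled blocks, then leave the final Output open."""
    blocks = [f"Input: {inp}\nOutput: {out}" for inp, out in examples]
    # The last block has no output: that is the part the model is asked to write.
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


examples = [
    ("I loved the movie!", "Positive"),
    ("The plot was boring.", "Negative"),
]
prompt = build_few_shot_prompt(examples, "The acting was superb.")
print(prompt)
```

The resulting string would be sent to the model as-is; any model client could be used for that step.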

Few-Shot Prompting Examples

Here are several examples of few-shot prompting across different tasks to illustrate how this technique can be effectively utilized. In each one, the final Output is left blank: that is where the model’s response goes.

1. Sentiment Analysis

Task: Classify customer feedback as positive, negative, or neutral.

Input: "I absolutely love this product!"

Output: Positive

Input: "This is the worst experience I've ever had."

Output: Negative

Input: "It's okay, not great but not terrible."

Output: Neutral

Input: "Fantastic service and great quality!"

Output:

2. Language Translation

Task: Translate phrases from English to Spanish.

Input: "Hello, how are you?"

Output: "Hola, ¿cómo estás?"

Input: "What time is it?"

Output: "¿Qué hora es?"

Input: "I love to travel."

Output: "Me encanta viajar."

Input: "Where is the nearest restaurant?"

Output:

3. Text Summarization

Task: Summarize a paragraph into a single sentence.

Input: "The quick brown fox jumps over the lazy dog. This sentence is often used to demonstrate the use of all letters in the English alphabet."

Output: "A quick brown fox jumps over a lazy dog, showcasing all letters of the alphabet."

Input: "Artificial intelligence is transforming industries by automating processes and providing insights through data analysis."

Output:

4. Question Answering

Task: Answer questions based on a given context.

Context: "The Great Wall of China is a series of fortifications made of various materials, built to protect against invasions."

Input: "What was the purpose of the Great Wall of China?"

Output: "To protect against invasions."

Input: "What materials were used to build the Great Wall?"

Output:

5. Code Generation

Task: Generate code snippets based on a description.

Input: "Create a function that adds two numbers."

Output:

```python
def add_numbers(a, b):
    return a + b
```

6. Creative Writing

Task: Generate a short story based on a prompt.

Input: "Once upon a time in a small village, there lived a kind old man."

Output: "He was known for his wisdom and generosity, always helping those in need."

Input: "In a world where magic exists, a young girl discovers her powers."

Output:

These examples demonstrate how few-shot prompting can guide AI models to produce desired outputs by providing context and structure through a limited number of examples. This technique is versatile and can be adapted to various tasks, enhancing the model’s ability to understand and respond appropriately.
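With chat-style models, the same sentiment examples are often supplied as alternating user and assistant turns instead of one block of text, so the model sees the exact reply format demonstrated. The sketch below builds such a message list with plain dictionaries; the `role`/`content` keys follow a common convention, but the exact schema varies by provider (some accept the task description as a separate system parameter rather than a message), and `to_chat_messages` is a hypothetical helper. No API call is made here.

```python
def to_chat_messages(task, examples, query):
    """Turn a task description and (input, output) pairs into chat-style messages.

    Each example becomes a user turn followed by an assistant turn, so the
    model sees demonstrations of the reply format before the real query.
    """
    messages = [{"role": "system", "content": task}]
    for inp, out in examples:
        messages.append({"role": "user", "content": inp})
        messages.append({"role": "assistant", "content": out})
    # The real query is the final user turn; the model's reply completes the pattern.
    messages.append({"role": "user", "content": query})
    return messages


task = "Classify customer feedback as positive, negative, or neutral."
examples = [
    ("I absolutely love this product!", "Positive"),
    ("This is the worst experience I've ever had.", "Negative"),
    ("It's okay, not great but not terrible.", "Neutral"),
]
messages = to_chat_messages(task, examples, "Fantastic service and great quality!")
for m in messages:
    print(f"{m['role']:>9}: {m['content']}")
```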

How to Do Effective Few-Shot Prompts

The key to success with few-shot prompting is constructing prompts that effectively communicate your desired task to the language model. Here are some best practices to keep in mind:

- Use clear, representative examples. The examples you include should be typical of the inputs the model will see and the outputs you want it to generate. Avoid edge cases or ambiguous examples that could throw the model off track.

- Provide enough examples. While you don’t need a huge dataset, you usually want to include more than one example to give the model a solid understanding of the task. 2-5 examples is often a good range.

- Keep examples concise. The model should be able to quickly grasp the pattern from your examples. Trim any unnecessary details and focus on the core input/output structure.

- Use delimiters. Clearly separate your examples and the final input you want the model to process. A simple delimiter like “---” works well. This helps the model understand where the examples end and its task begins.

- Experiment with example order. Sometimes the order of your examples can impact results. If you’re not getting ideal outputs, try rearranging the examples in your prompt.

- Refine your prompt over time. As you see how the model responds, you can iteratively adjust your prompt to get better and more consistent results. Treat prompt engineering as an ongoing process.
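Two of the practices above, explicit delimiters and experimenting with example order, are easy to automate. The sketch below joins the instruction, each example, and the final input with a `---` line, then produces several prompts that differ only in example order so their outputs can be compared. The helper names, the delimiter choice, and the sample reviews are illustrative assumptions; judging which ordering works best is left to you.

```python
import random

DELIMITER = "---"  # any marker unlikely to appear in the data itself


def build_prompt(instruction, examples, query):
    """Separate the instruction, each example, and the final input with DELIMITER."""
    parts = [instruction]
    parts += [f"Input: {inp}\nOutput: {out}" for inp, out in examples]
    parts.append(f"Input: {query}\nOutput:")
    return f"\n{DELIMITER}\n".join(parts)


def prompt_variants(instruction, examples, query, n_orders=3, seed=0):
    """Build prompts that differ only in example order, for side-by-side testing."""
    rng = random.Random(seed)  # seeded so the same variants are produced every run
    variants = []
    for _ in range(n_orders):
        shuffled = list(examples)  # copy so the caller's list is not reordered
        rng.shuffle(shuffled)
        variants.append(build_prompt(instruction, shuffled, query))
    return variants


examples = [
    ("I loved it!", "Positive"),
    ("Too long and dull.", "Negative"),
    ("It was fine.", "Neutral"),
]
variants = prompt_variants("Classify the sentiment of each review.", examples, "Great soundtrack.")
for v in variants:
    print(v, end="\n\n")
```

A fixed seed keeps the experiment repeatable; with only a few examples, some shuffles may coincide, so raising `n_orders` does not guarantee distinct orderings.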

Zero-Shot vs. One-Shot vs. Few-Shot Prompting

You may have heard the terms “zero-shot,” “one-shot,” and “few-shot” in the context of prompting language models. Here’s a quick breakdown of what they mean:

- Zero-shot: In zero-shot prompting, you provide no examples at all. You simply describe the task in natural language and hope the model can figure out what you want from your explanation alone. This works for some very straightforward tasks but often produces lower-quality or less predictable results.

- One-shot: One-shot prompting is providing a single example of the desired input/output. This is a big step up from zero-shot as it gives the model a concrete frame of reference. One-shot can work well for simple tasks but often benefits from a few more examples.

- Few-shot: Few-shot prompting, as we’ve discussed, is including a small handful of examples (usually 2-5) to more robustly specify the task. This is the sweet spot for most applications, providing enough information for the model to reliably produce good outputs without the burden of extensive data collection.

In summary, reliability and output quality typically rise from zero-shot to one-shot to few-shot, and so does the effort of preparing examples. The right approach depends on your task complexity and data availability.
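The three styles differ only in how many examples precede the real input, which the sketch below makes explicit with a single parameter `k`: 0 gives a zero-shot prompt, 1 a one-shot prompt, and 2 or more a few-shot prompt. It reuses the English-to-Spanish pairs from the translation example; `k_shot_prompt` is a hypothetical helper and the layout is an assumption.

```python
def k_shot_prompt(instruction, examples, query, k):
    """Include the first k examples: k=0 is zero-shot, k=1 one-shot, k>=2 few-shot."""
    lines = [instruction, ""]
    for inp, out in examples[:k]:
        lines += [f"Input: {inp}", f"Output: {out}", ""]
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


pool = [
    ("Hello, how are you?", "Hola, ¿cómo estás?"),
    ("What time is it?", "¿Qué hora es?"),
    ("I love to travel.", "Me encanta viajar."),
]
instruction = "Translate the English phrase to Spanish."
for k in (0, 1, 3):
    print(f"=== {k}-shot ===")
    print(k_shot_prompt(instruction, pool, "Where is the nearest restaurant?", k))
    print()
```

Generating all three variants from the same pool makes it straightforward to compare how much the added examples help on your own task.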

Advanced Few-Shot Prompting Techniques

As you get more experienced with few-shot prompting, there are some more advanced techniques you can use to eke out even better performance:

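- Chain of Thought Prompting – This involves prompting the model to break down its reasoning into a series of steps, e.g., “Let’s solve this step-by-step: First… Then… Finally…” In a few-shot setting, the examples themselves include worked reasoning before the final answer, so the model imitates that structure. This can lead to more reliable and interpretable outputs for complex tasks.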

- Dynamic Few-Shot Prompting – Instead of using the same static examples every time, you can select (or generate) examples on the fly based on the specific input, for instance by retrieving the stored examples most similar to it. This allows for more tailored and relevant prompts.

- Prompt Tuning – A technique where you train a small set of continuous “soft prompt” embeddings that are prepended to the input while keeping the rest of the language model frozen. Unlike few-shot prompting, it requires training data and gradient updates, but it allows for efficient customization without a full fine-tuning process.

- Retrieval-Augmented Prompting – For knowledge-intensive tasks, you can augment your prompt with relevant information retrieved from an external knowledge base. This allows the model to draw on a larger pool of information beyond its training data.

- Ensemble Prompting – Generating multiple prompts for the same input and combining the outputs can sometimes produce better results than a single prompt. You can ensemble by majority vote, averaging, or other methods.
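Dynamic few-shot prompting, from the list above, can be sketched as "rank a pool of stored examples by similarity to the incoming input and keep the top k." Production systems usually rank with embedding similarity; to stay self-contained, the sketch below swaps in Jaccard overlap of lowercase word sets as a crude stand-in. The helper names and the example pool are hypothetical.

```python
import re


def tokens(text):
    """Lowercase word set; a crude stand-in for an embedding model."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a, b):
    """Overlap between two word sets, from 0.0 (disjoint) to 1.0 (identical)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def select_examples(pool, query, k=2):
    """Pick the k (input, output) pairs whose inputs are most similar to the query."""
    q = tokens(query)
    ranked = sorted(pool, key=lambda ex: jaccard(tokens(ex[0]), q), reverse=True)
    return ranked[:k]


pool = [
    ("The battery dies after an hour.", "Negative"),
    ("Shipping was fast and the box was intact.", "Positive"),
    ("The battery lasts all day, love it.", "Positive"),
    ("Customer support never answered my email.", "Negative"),
]
# The selected pairs would then be formatted into the prompt as usual.
chosen = select_examples(pool, "How long does the battery last?", k=2)
for inp, out in chosen:
    print(f"{out}: {inp}")
```

For a battery question, both battery reviews outrank the shipping and support ones, so the model sees the most relevant demonstrations. Note that simple word overlap misses near-synonyms ("last" vs. "lasts"), which is one reason embeddings are the usual choice.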

These advanced techniques showcase the wide design space and untapped potential in prompt engineering. As we develop more sophisticated prompting methods, we’ll be able to squeeze even more capability out of language models without expensive fine-tuning.
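Ensemble prompting, described above, needs a way to combine several outputs for the same input. A minimal sketch of the majority-vote option is below: it normalizes each answer, counts them, and reports the winning label with its share of the votes (a rough agreement signal). The outputs are hypothetical stand-ins for replies to three different prompts; producing those replies is out of scope here.

```python
from collections import Counter


def majority_vote(outputs):
    """Return the most common normalized answer and its share of the votes.

    Ties go to the answer seen first, because Counter.most_common orders
    equal counts by first occurrence.
    """
    if not outputs:
        raise ValueError("need at least one output to vote on")
    normalized = [o.strip().lower() for o in outputs]
    label, count = Counter(normalized).most_common(1)[0]
    return label, count / len(normalized)


# Hypothetical replies to the same input, sent through three different prompts
outputs = ["Positive", "positive ", "Neutral"]
label, agreement = majority_vote(outputs)
print(label, round(agreement, 2))
```

Majority vote suits classification-style answers; free-form outputs generally need a different combination strategy.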

Benefits of Few-Shot Prompting

There are several key benefits to using few-shot prompting with LLMs:

- Efficiency – It allows you to get an LLM to perform a new task without needing to gather a large dataset or spend time and resources fine-tuning. Just a handful of examples can be enough.

- Flexibility – You can quickly repurpose an LLM for many different tasks by simply modifying the examples you include in the prompt. There’s no need to fine-tune a separate model for each use case.

- Quality – LLMs have such broad language understanding that they can often infer the task extremely well from just a few examples and generate outputs that approach those of models specifically fine-tuned for that task.

- Simplicity – Constructing a prompt with examples is much more accessible than fine-tuning models, making powerful LLM capabilities available to more users.

- Speed – A new or revised prompt takes effect on the very next request, whereas fine-tuning a model can take significant time.

Limitations of Few-Shot Prompting

While few-shot prompting is very powerful, it’s not always a complete replacement for fine-tuning. Some limitations to be aware of:

- Lack of Customization – With few-shot prompting, you’re limited to the knowledge and capabilities of the base LLM. Fine-tuning allows you to more extensively customize a model for your specific domain and task.

- Unpredictable Outputs – The model has more leeway to go in unexpected directions compared to a fine-tuned model trained on many examples to produce a narrower range of outputs. Careful prompt engineering is needed for reliable results.

- Inability to Learn New Knowledge – Few-shot prompting does not actually expand what the model knows; it only coaxes the model to apply its existing knowledge in new ways. To expand the model’s knowledge, fine-tuning on additional data is needed.

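- Lack of Efficiency for High-Volume Use Cases – The examples are sent again with every request, adding input tokens (and therefore cost and latency) to each call. If you need to apply the model to a task at very high volume, few-shot prompting may be inefficient compared to a dedicated fine-tuned model.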

- Potential for Biased or Inconsistent Results – With fine-tuning, you have tight control over the data used to tune the model. With few-shot prompting, biases or inconsistencies in the base model may come through.
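To make the high-volume cost concrete, the sketch below estimates how much example text is re-sent across many requests. It counts whitespace-separated words as a rough proxy for tokens, which is an assumption: real tokenizers split text differently, so treat the numbers as an order-of-magnitude guide only. The `extra_words` helper and the request count are hypothetical.

```python
def extra_words(examples, requests):
    """Estimate example overhead per request and in total across all requests.

    Words split on whitespace stand in for tokens; an actual tokenizer
    will give different (often larger) counts.
    """
    per_request = sum(len(f"Input: {i}\nOutput: {o}".split()) for i, o in examples)
    return per_request, per_request * requests


examples = [
    ("I loved the movie!", "Positive"),
    ("The plot was boring.", "Negative"),
]
per_request, total = extra_words(examples, requests=1_000_000)
print(f"~{per_request} extra words per request, ~{total:,} over all requests")
```

Even two short examples add up at scale, which is why a fine-tuned model that needs no examples in the prompt can be cheaper for very high-volume workloads.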

Applications of Few-Shot Prompting

Despite some limitations, few-shot prompting has proven to be a very effective and practical technique for many applications. Some common use cases include:

- Content Generation – Few-shot prompts are great for generating product descriptions, ad copy, social media posts, article outlines, poetry, lyrics, and all sorts of other content. Just provide a few examples of the content you want in the desired style and tone.

- Data Enrichment – You can use few-shot prompts to extract specific types of information from raw text, like pulling out company names, identifying key topics, or categorizing sentiment. Give it a few examples of the input text and extracted information you want.

- Text Transformation – Few-shot prompts make it easy to transform text from one format to another, like rewriting a passage to be more concise, changing the perspective from first-person to third-person, translating between languages, or converting unstructured notes into a neatly formatted document.

- Brainstorming & Ideation – By providing a few examples of ideas, LLMs can help generate all sorts of additional creative ideas for products, stories, names, slogans, designs and more.

- Analysis & Insights – With a few examples, you can prompt LLMs to provide all sorts of intelligent analysis, like identifying key themes across customer reviews, extracting important clauses from contracts, or even analyzing code to explain what it does.
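For the data enrichment case above, it helps to show the examples in a machine-readable output format and then validate what comes back. The sketch below asks for company names as a JSON list, using fictional company names, and parses a reply defensively, falling back to an empty list if the reply is not valid JSON. The helper names and the sample reply are hypothetical; no model is called.

```python
import json

# Fictional examples; json.dumps guarantees the demonstrated output is valid JSON.
EXAMPLES = [
    ("Acme Corp signed a supply deal with Globex.", ["Acme Corp", "Globex"]),
    ("Shares of Hooli fell after the announcement.", ["Hooli"]),
]


def build_extraction_prompt(text):
    """Few-shot prompt that asks for company names as a JSON list of strings."""
    parts = ["Extract company names as a JSON list of strings."]
    for inp, names in EXAMPLES:
        parts.append(f"Text: {inp}\nCompanies: {json.dumps(names)}")
    parts.append(f"Text: {text}\nCompanies:")
    return "\n\n".join(parts)


def parse_companies(response):
    """Parse the model's reply; return [] unless it is a JSON list of strings."""
    try:
        value = json.loads(response)
    except json.JSONDecodeError:
        return []
    if isinstance(value, list) and all(isinstance(v, str) for v in value):
        return value
    return []


prompt = build_extraction_prompt("Initech hired a consultant from Umbrella Ltd.")
# A hypothetical model reply, used here only to exercise the parser
print(parse_companies('["Initech", "Umbrella Ltd"]'))
```

Validating the reply matters because even well-prompted models occasionally return extra prose around the JSON; how you handle such failures (retry, log, or skip) depends on your pipeline.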

The applications are nearly endless. Whenever you have a language-related task that would benefit from the power of a large language model, few-shot prompting is worth considering as an efficient way to get great results.

Addlly’s No Prompt AI

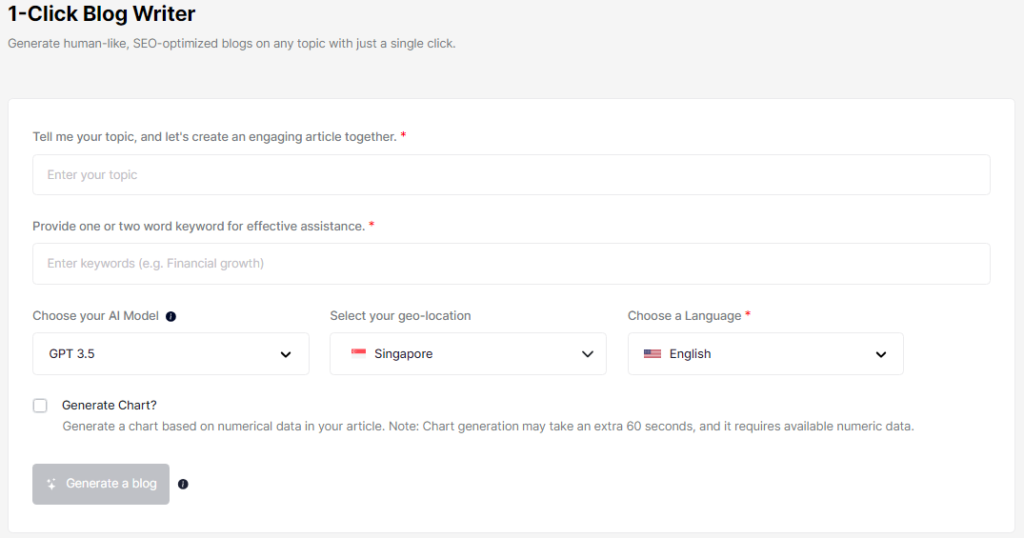

While few-shot prompting is incredibly powerful, it still requires some skill to construct effective prompts with the right examples to get reliable, high-quality results for a given task. This is where Addlly AI comes in.

No Prompt AI is a revolutionary new offering that makes it easier than ever to get the outputs you need from LLMs without any prompt engineering required. Simply provide your input and specify the type of output you want, and No Prompt AI will automatically construct an optimal prompt behind the scenes to generate the best possible results.

For example, if you want to generate a blog with our 1-Click Blog Writer, you would just provide a description of your topic, language and AI model. No Prompt AI has already been trained on SEO best practices, so it knows exactly how to prompt the LLM to generate blogs for any topic.

No matter what language task you’re working on, chances are No Prompt AI can help you get the results you need faster and easier than ever before. It eliminates the need for prompt engineering so you can simply focus on the inputs and outputs that matter for your use case.

Under the hood, No Prompt AI is powered by a combination of:

- Extensive prompt engineering by Addlly’s team to translate each supported task into reliable, effective few-shot prompts

- Fine-tuning of LLMs on curated datasets for each task to further optimize performance

- Ongoing learning and improvement from QA testing and real usage to continuously refine results

The result is an incredibly powerful and flexible AI system that makes the full potential of large language models accessible to everyone. It democratizes access to cutting-edge AI capabilities and empowers users to leverage LLMs for their unique needs with unprecedented ease.

To experience the power of No Prompt AI yourself, head over to our app and create a free account. You’ll get 6 free credits to start generating outputs right away.

Conclusion

To sum up, few-shot prompting is a useful way to make AI language models work better. Given a few examples, the AI can understand and perform tasks more accurately. This method helps the AI write answers in the right format and handle tricky tasks better than if it had no examples at all.

However, few-shot prompting isn’t perfect. It can still struggle with very complex problems. Scientists are working to make it even better.

Even with its limits, few-shot prompting has shown good results in real-life use. Studies show that when set up right, it can work well even with just a few examples.

As AI keeps getting smarter, few-shot prompting will likely stay an important tool. It will help AI systems learn new tasks quickly from a handful of examples, much as humans do.

Author


As an SEO Marketing Specialist at Addlly.ai, my expertise lies in optimizing online visibility through strategic keyword integration and engaging content creation. My approach is holistic, blending advanced SEO techniques with compelling storytelling to enhance brand presence. A true SEO nerd at heart, I can often be found reading the latest SEO blogs, devouring industry insights and soaking up the latest news.
